This is my first blogpost 🎉 as part of my new year’s resolution (2020 🤦♀️) to contribute more to the open-source community.

Intro

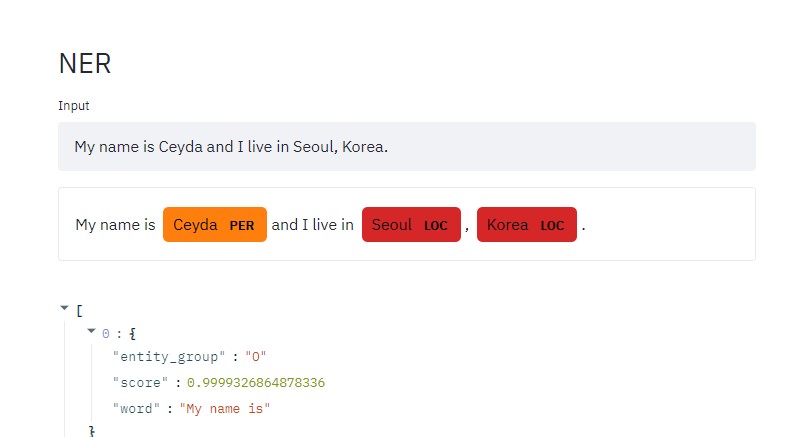

Do you have an NER model that you want to wrap in an API/UI super easily and host publicly or privately? Check out this public demo to decide if this is what you want.

Requirements

Clone my repo, which has simple starter scripts to get you started.

git clone https://github.com/cceyda/lit-NER.git

Install the necessary libs (the usual)

cd lit-NER/examples

pip3 install -r requirements.txt

Super fast start

In case you don’t have a pretrained NER model you can just use a model already available in 🤗 models. Just take a note of the model name, then look at serve_pretrained.ipynb* for a super fast start! Or keep reading to deploy your own custom model!

Details

I will keep it simple as the notebooks in the example directory already have comments & details on what you might need to modify.

Prepare your model

There are many tutorials on how to train a HuggingFace Transformer for NER, like this one, so I’ll skip that part.

After training you should have a directory like this:
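As a rough guide, assuming the model and tokenizer were saved with `save_pretrained`, the directory typically holds a `config.json`, a weights file such as `pytorch_model.bin`, and a few tokenizer files. The exact names depend on the model and library version, so the lists below are assumptions to adjust, and `missing_model_files` is a hypothetical helper, not something from the repo.

```python
from pathlib import Path

# Assumed file names; adjust to whatever your training script actually wrote.
REQUIRED_FILES = ("config.json",)
WEIGHT_FILES = ("pytorch_model.bin", "model.safetensors")  # any one is enough


def missing_model_files(model_dir):
    """Return the expected files that are absent from model_dir."""
    model_dir = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (model_dir / name).is_file()]
    # The weights may be stored under either name, so only complain if both are absent.
    if not any((model_dir / name).is_file() for name in WEIGHT_FILES):
        missing.append(" or ".join(WEIGHT_FILES))
    return missing
```

Calling `missing_model_files("my_ner_model")` (a placeholder path) before packaging gives a quick hint if the training run did not save everything.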

Now it is time to package & serve your model.

Torchserve

Torchserve is an official solution from the PyTorch team for making model deployment easier.

Some feature highlights:

- Automatic batching of incoming requests
- You can easily spawn multiple workers and change the number of workers. It also respawns a worker automatically if it dies for whatever reason.
- Model versioning
- Ready-made handlers for many model-zoo models

among many other features.

We will use a custom service handler -> lit_ner/serve.py*. Although there is already an official example handler on how to deploy Hugging Face transformers, I have gone and simplified it further for the sake of clarity. Feel free to look at the code but don’t worry much about it for now.
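To give a feel for what a custom handler does, here is a minimal sketch of the general shape: an `initialize` step that loads things once, and a `handle` step that receives a batch of requests and returns one response per request. This is not the code in lit_ner/serve.py; the class name `NERHandlerSketch` and the toy "tag capitalized words" predictor are made up so the example runs without a model or Torchserve installed.

```python
import json


class NERHandlerSketch:
    """Illustrative handler with the initialize/handle shape Torchserve calls."""

    def __init__(self):
        self.initialized = False
        self.predict = None

    def initialize(self, context):
        # A real handler would load the tokenizer and model here, typically from
        # the model directory that Torchserve exposes through the context.
        # This stand-in just tags capitalized words (an assumption for the demo).
        self.predict = lambda text: [
            {"word": w, "entity": "CAPITALIZED"} for w in text.split() if w[:1].isupper()
        ]
        self.initialized = True

    def preprocess(self, requests):
        # Torchserve passes a batch: a list of dicts with the payload under
        # "data" or "body", usually as bytes.
        texts = []
        for request in requests:
            payload = request.get("data") or request.get("body")
            if isinstance(payload, (bytes, bytearray)):
                payload = payload.decode("utf-8")
            texts.append(payload)
        return texts

    def handle(self, data, context):
        texts = self.preprocess(data)
        # Return exactly one response per request, in the same order.
        return [json.dumps(self.predict(text)) for text in texts]
```

The important part is the contract rather than the toy predictor: a list of requests comes in, a list of the same length goes out.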

I plan on doing another post on torchserve soon so stay tuned!

Run the examples/serve.ipynb* notebook. It has all the details you need to package (aka archive) your model (as a .mar file) and to start the torchserve server.
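If you are curious what packaging and starting boils down to, it is roughly two CLI calls: `torch-model-archiver` to build the .mar file and `torchserve --start` to serve it. The sketch below only assembles those commands; the model name `ner`, the directory `my_ner_model`, the `model_store` folder and the file names inside the model directory are placeholders I picked for illustration, and the notebook may use different values.

```python
import shlex


def archive_command(model_name, model_dir, handler, export_path="model_store", version="1.0"):
    """Build the torch-model-archiver call that produces <model_name>.mar."""
    # Files the handler needs next to the weights (assumed names, adjust as needed).
    extra = ",".join(f"{model_dir}/{name}" for name in ("config.json", "vocab.txt"))
    return [
        "torch-model-archiver",
        "--model-name", model_name,
        "--version", version,
        "--serialized-file", f"{model_dir}/pytorch_model.bin",
        "--handler", handler,
        "--extra-files", extra,
        "--export-path", export_path,
    ]


def serve_command(model_name, model_store="model_store"):
    """Build the torchserve call that starts the server with one model loaded."""
    return [
        "torchserve", "--start",
        "--model-store", model_store,
        "--models", f"{model_name}={model_name}.mar",
    ]


# Print the commands instead of running them, so nothing is executed by accident.
print(shlex.join(archive_command("ner", "my_ner_model", "../lit_ner/serve.py")))
print(shlex.join(serve_command("ner")))
```

Passing either list to `subprocess.run(..., check=True)` would execute it, provided the export folder exists and both tools are installed.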

Streamlit

It wouldn’t be an overstatement to say I’m in love with streamlit these days. Although running this demo requires no knowledge of the library I highly recommend you give it a try.

I plan on doing a post on streamlit in the future!

This command, run from the root of the repo, will start the UI part of our demo:

cd examples && streamlit run ../lit_ner/lit_ner.py --server.port 7864

Then follow the links in the output or open http://localhost:7864

And if everything goes right TA~DA you have a demo! 😃
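The API half can also be poked at directly, without the UI. A minimal sketch, assuming torchserve is listening on its default inference port (8080) and the model was registered under the placeholder name `ner`; what the response looks like depends entirely on the handler, so it is returned as raw text here.

```python
import urllib.request


def build_prediction_request(text, model_name, host="http://localhost:8080"):
    """Build a POST request for the torchserve /predictions/<model_name> endpoint."""
    url = f"{host}/predictions/{model_name}"
    return urllib.request.Request(url, data=text.encode("utf-8"), method="POST")


def predict(text, model_name, host="http://localhost:8080"):
    """Send the text to a running server and return the raw response body."""
    request = build_prediction_request(text, model_name, host)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8")
```

With the server running, `predict("My name is Ceyda and I live in Seoul", "ner")` should come back with whatever your handler produces.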

Q&A

Questions & Contributions & Comments are welcome~ Leave them below or open an issue.

Follow me on Twitter to be notified of new posts~